By Shaun Kleber

When junior Austin Burch took the Georgia High School Graduation Test diagnostic exams last November, he thought they were fairly easy. When he saw his scores in early January, however, he was shocked that he had failed two of the four core subjects, scoring below 70 percent on each. And then he noticed another problem: there were four scores on the page, but he had been in school for only three of the testing days. Burch had received a score for a test he never took.

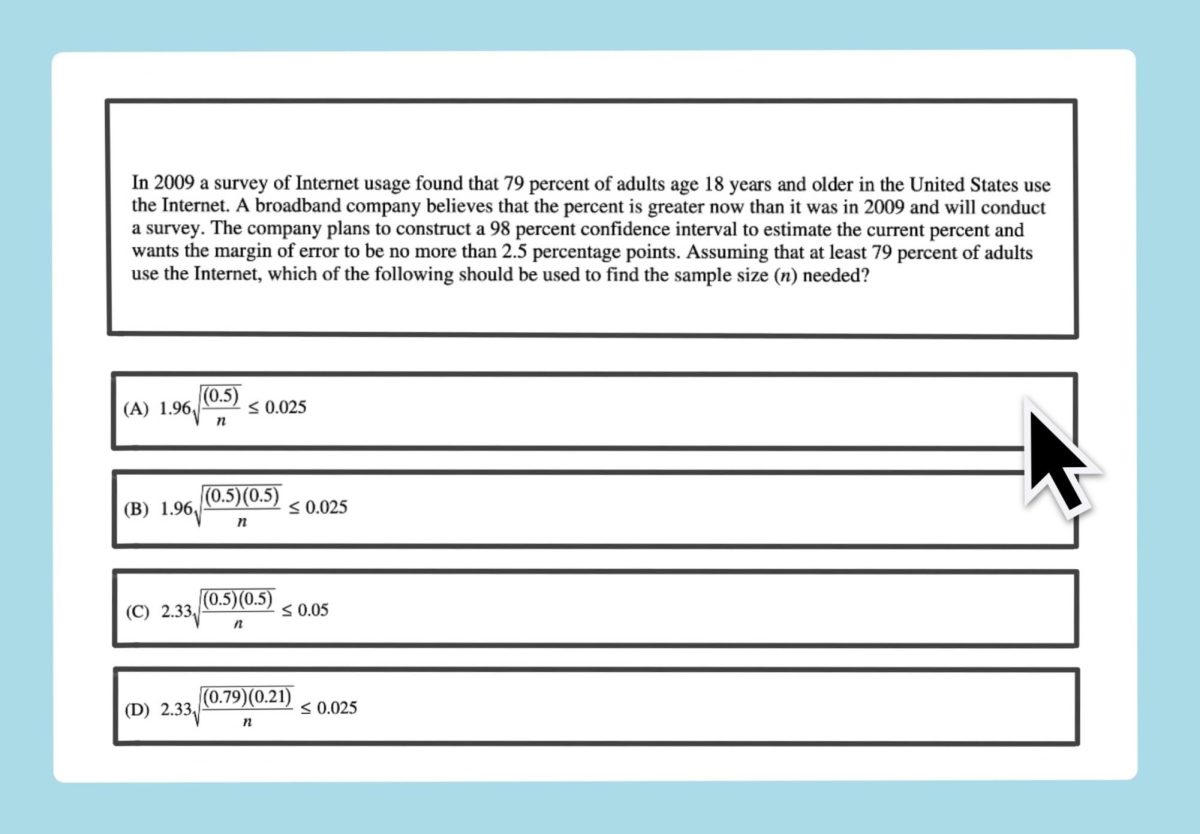

Last fall, all juniors took diagnostic tests with the intention of determining their preparedness for the GHSGT that they will be taking in March. The tests spanned four days—one day for each core subject—and students dedicated approximately two hours each day to testing. Grady GHSGT coordinator and math teacher Alvie Thompkins, who made, administered and graded the tests, said this year’s diagnostic scores were the lowest she’s ever seen. A Southerner survey of 270 juniors found that approximately 97 percent of students failed at least one section of the diagnostic tests, and a review of two of the tests revealed several errors in the tests themselves. Many students, parents and even some teachers believe that there were flaws in the process that rendered the test scores unreliable and meaningless as indicators of student preparedness.

Students and teachers contend that errors in the diagnostic tests themselves and possible flaws in the grading may have contributed to the abysmal scores.

Junior Julia Rapoport recalled missing passages on the language arts test, misnumbered questions throughout, questions with no correct answer or multiple correct answers and some questions that she could not read.

While Thompkins did not provide test packets for this story, The Southerner found a box containing the math and language arts sections in a recycling bin and confirmed the errors reported on both tests. The language arts test had repeated questions, a missing passage and another passage that was cut short. The math test had six errors with the numbering of the questions, meaning some numbers were skipped or used twice. Since these tests were taken on Scantrons, one numbering error could skew the entire test.

“The math test had the most mess-ups, so many that we had to take it a second time,” Rapoport said. “Each [test] had four or five [errors] at least.”

Junior Stayce Evans estimated that 20 percent of the test questions were flawed in some way.

“How could you possibly grade that [test] and get a score out of it when there were so many errors?” Evans said. “It couldn’t have been correct, no way possible. People failed who never fail, and people passed who never pass.”

One teacher called the testing environment and the process itself a “circus” but requested anonymity because “politically, it feels dangerous to speak out against anything that’s for GGT prep. Anything done in the name of GGT prep is kind of seen as sacred. You’re not supposed to say anything about it.”

The teacher posed two scenarios to explain the scores.

“Either the test was incorrectly graded, which makes it completely invalid, or [the scores] were purposely deflated to encourage kids to attend tutorials,” the teacher said. “That’s just wrong. You need to give students an honest evaluation of where they are. On the surface, it doesn’t seem like it was.”

Thompkins, on the other hand, offered alternative explanations. First, Thompkins said, students did not take the diagnostic tests seriously because the tests were not being graded for a class. Additionally, she said, many students may have forgotten or never learned some material that was on the tests.

“The diagnostic test scores are not expected to be high,” Thompkins said. “We’re not disappointed. … I don’t want anyone to look at these scores and think they are negative.”

Rapoport said she took the tests seriously but still failed two of them.

“I always take tests as if they are real tests,” Rapoport said. “I didn’t go in and ‘Christmas-tree’ [them] or anything. I read every question [and] tried to figure out the answer.”

Grady parent Michelle Rapoport, Julia’s mother, finds it hard to believe that all students did not take the tests seriously.

“If you’re going to administer a test and you know the kids aren’t going to take it seriously, then what’s the point?” Rapoport said.

Another teacher, who wished to remain anonymous because she said she has respect for her colleagues and does not want to sabotage what she views as a well-intended effort, agreed that students may not have taken the test seriously but does not think that is the only explanation for the failing scores. She also attributes the low scores to flaws in the diagnostic tests.

“The benchmarks that were given this year were terrible,” the teacher said, adding that she only saw the tests for the subject she teaches. “It seemed to me that whoever put that test together drew from some other sources fairly quickly. There wasn’t a lot of double-checking to make sure that test was actually a diagnostic.”

Thompkins explained that she did draw the test questions from an outside source, USATestprep, and she firmly stands by their accuracy. She believes she only made two mistakes across all four tests, one on the math test and one on the language arts test, and she left those two questions out when grading them.

Thompkins maintained that questions on the language arts test that were missing the corresponding reading passage were not flawed because there was enough information in the questions to answer them without the passage.

“It is possible to make a mistake,” Thompkins said. “It’s human error.”

In early January, Thompkins sent a letter to parents with their child’s scores, explaining that if their child scored below an 85 percent on any test, he or she needs to attend tutorials every Wednesday or Thursday and every Saturday. The unsigned letter, written by Thompkins and printed on Murray’s letterhead, was eight sentences long and had 10 grammatical and spelling errors.

Michelle Rapoport does not trust the validity of the diagnostic scores and believes the letter only added insult to injury. She feels confident that many other parents feel the same way.

“It’s an embarrassment,” Rapoport said. “I thought it was an insult to both the parents and the kids to send that letter home without some explanation.”

Rapoport believes that when students who have done well on national standardized tests, such as the PSAT and SAT, failed this diagnostic test, it shows there is something wrong with the diagnostic.

“You’re not going to tell me the PSAT was wrong…and the SAT was wrong,” Rapoport said. “I’m going to trust the national test.”

Julia Rapoport agreed that this disconnect represents fault on the part of the diagnostic tests. Also, stories like Burch’s make Julia Rapoport question the legitimacy of the scores.

The second teacher said her homeroom has some of the top PSAT scores in the school, which is part of the reason why she is questioning her students’ low diagnostic test scores.

“The fact that no one in [my] homeroom…met the standard [of scoring an 85 percent or better] led me to believe that maybe the test wasn’t valid,” the teacher said.

The first teacher noticed the same discrepancy between students’ past academic performance and their performance on these diagnostic tests.

“The information that I’m getting back just doesn’t quite mesh with what I know about these students,” the teacher said. “Higher-achieving students should get higher scores, and lower-achieving students should get lower scores. It just doesn’t make sense.”

Michelle Rapoport said the school needs to explain what happened.

“My problem is why they would send home a letter with those scores when they have to know that those scores are not correct,” Rapoport said. “If they think they are correct, we have a major problem. It doesn’t make any sense.”

Thompkins said that every year a majority of the junior class fails the diagnostic tests, but after many of them come to tutorials, they end up passing the GHSGT in the spring.

She noted that an average of 180 juniors came every Saturday last year, which was approximately three-fourths of the class. This year, she expects to have at least 220 of the 324 juniors attend tutorials regularly.

Senior Erin Bailie said she failed the diagnostic tests last year but received “pass-plus” scores on the GHSGT without attending tutorials. She believes that the recurring problem of having so many people fail these diagnostic tests is a scare tactic.

“I think they are intentionally making the scores low to scare kids,” Bailie said. “If you hear the word ‘fail’ or see a bad score, I think it scares you into trying harder or studying, whereas, otherwise, I don’t think students would take the GGT seriously.”

Bailie said that since the scores don’t go on students’ permanent records but seem to get them to study for the GHSGT, it may be an effective tactic.

When confronted with the idea that she had intentionally lowered scores as a scare tactic, Thompkins pulled out a thick packet, which contained what appeared to be every junior’s name and listed their scores on all four tests.

“I don’t make this up,” Thompkins said. “[These tests are] a whole lot of work. I do this all on my own time. So instead of people questioning it, maybe they should say ‘thank you.’”

Evans attended one of the GHSGT tutorials on Feb. 16 and said they are “useful” but are not a substitute for a full class.

Michelle Rapoport believes that the low diagnostic scores have an adverse effect on the tutorial environment and the students who really need the tutorials.

“If these scores are invalid, and kids who don’t need tutorial are being told they should go to tutorial, then…they take valuable time from people who should be there,” Rapoport said. “It’s unfair to everybody.”

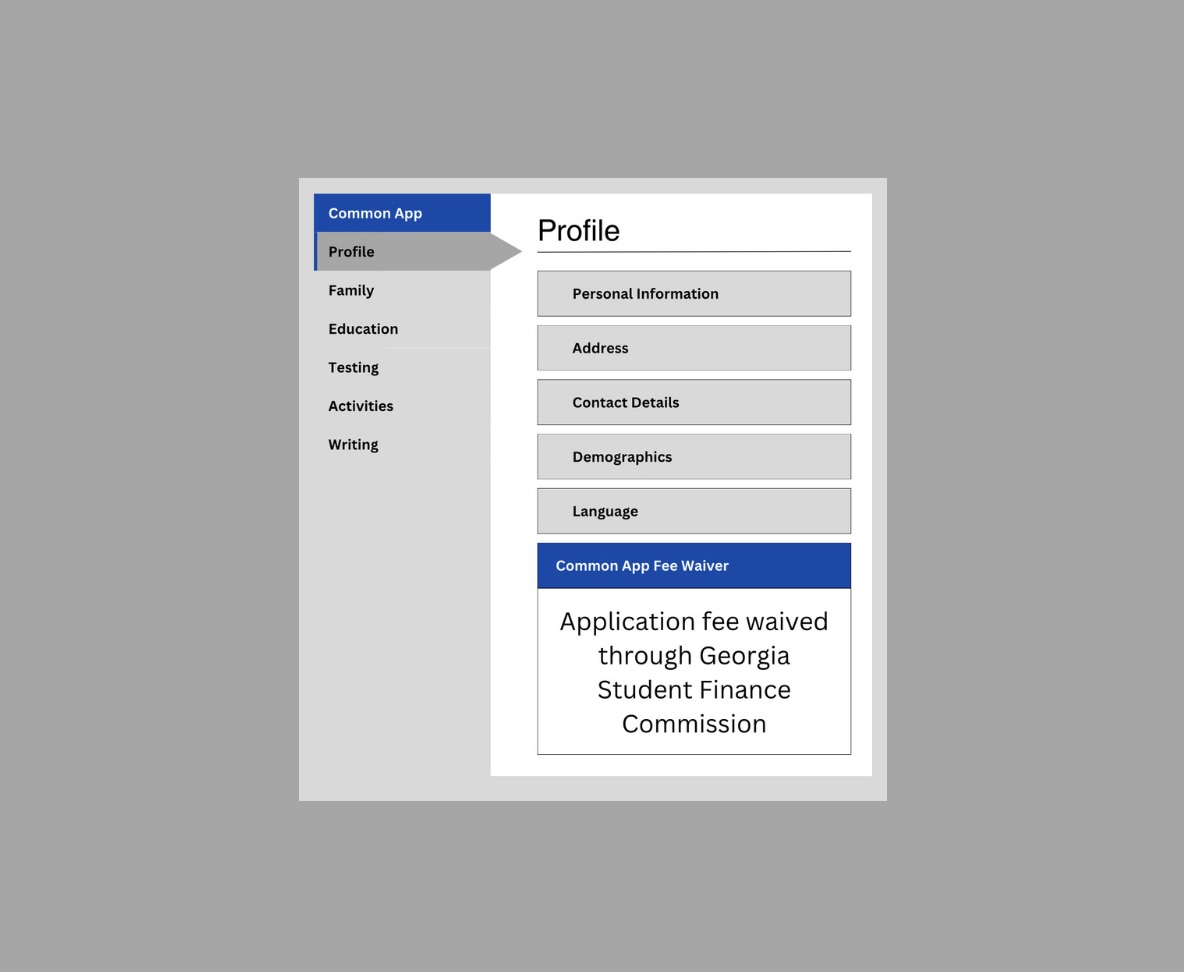

Murray understands that the tests were flawed but hopes that students will still attend tutorials.

“I don’t know where the flaw was,” Murray said. “I know it wasn’t the most academically solvent diagnostic test, but I also know that it will [help] those children who are economically disadvantaged by getting them the support that they need, whether it was flawed or not.”

While parents have received letters outlining their children’s scores on the diagnostic tests, teachers and even administrators remain in the dark as to how students fared.

Murray said he never saw the letter or the diagnostic test scores, but Thompkins claimed she showed him both.

Thompkins also claimed to have shared the scores with “all the teachers involved,” but when The Southerner asked several teachers who teach junior language arts, math, science or social studies classes, they said they had not received any of their students’ scores other than on the letters they were told to hand out to their homerooms.

Instructional coach Kimberly George-Reid, like Murray, said she never saw the scores or the letter.

“It doesn’t have to go through me,” George-Reid said. “It’s Ms. Thompkins’ program. I really don’t know anything about her process.”

Julia Rapoport is frustrated that she was not given her tests and answer sheets in order to look over what she did wrong.

“I don’t think it’s fair for them to give us a letter with scores on it without letting us see the tests and Scantrons and be able to look over what we got wrong,” Rapoport said.

The second teacher found the lack of transparency disturbing.

“I think it is inexcusable that, given the rationale that they gave, which was to identify students who needed assistance, that teachers were not given information about the scores,” the teacher said. “But given how bad the test was, I don’t think the information is relevant.”

Despite two weeks of daily requests, Thompkins did not provide test scores, test packets or answer keys by press time.

Michelle Rapoport can only hope that, given the past and current problems with the GHSGT diagnostic tests, these issues will be fixed for future junior classes.

“I hope that we don’t continue to repeat this process…since we already have before,” Rapoport said. “If we are going to do some kind of diagnostic test, it should be meaningful, and if it’s not going to be meaningful, then we shouldn’t bother doing it.”

Bailie believes that the entire process should be eliminated and that juniors should instead be given practice tests to look over on their own, or perhaps during advisement or core classes.

“I don’t think it’s worth it to make the juniors miss even more class,” Bailie said.

The first teacher disagreed, arguing that the diagnostic tests are necessary but need to be created by a team of teachers rather than just one to ensure accountability, transparency and “some double-checking.”

The second teacher said that, while she believes the diagnostic tests are “well-intended,” she has been assured by her teacher leader and other administrators that the testing will not be handled the same way in the future.

Thompkins said she is bothered that people believe she fabricated scores but will continue to work towards bettering Grady’s test scores.

“I know I’m going to leave Grady in better shape than I found it, as far as the Georgia High School Graduation Test,” Thompkins said. “To have a child think that I would cheat them—no, I work too hard for that.”

This story earned an Honorable Mention distinction in the News category of the 2011 National Scholastic Press Association Story of the Year competition. It also earned a Superior rating in the In-Depth News category at the 2011 Georgia Scholastic Press Association Awards Assembly, held on April 28, 2011. The page design earned Honorable Mention honors in the 2011 National Scholastic Press Association Design of the Year competition.