ChatGPT must be critically evaluated, approached with caution

January 27, 2023

Artificial intelligence has surged in popularity recently, spurring the development of more and more tools that quickly go viral online. While AI is an incredibly valuable resource with huge potential, it is also extremely risky. The way AI is currently being developed will create problems in the future, and these problems should be addressed before it is too late.

AI can now hold conversations, generate images, write school assignments, create voices and complete seemingly any task, simplifying and assisting in day-to-day life. However, it can also be biased and easily abused, and it could take jobs away from real people.

A newly introduced AI, ChatGPT, has become the main subject of these concerns. The platform uses AI to interact with users in a conversational way. It can answer follow-up questions based on previous information, admit mistakes, challenge incorrect premises, and reject inappropriate requests, according to its website. ChatGPT is trained by AI trainers through a reinforcement learning model using data samples, but despite this, the AI can still be incorrect, even when its response sounds plausible.

Another major issue involving AI is bias. When asked whether it has any biases, ChatGPT said no because, as a machine, it doesn’t have any personal beliefs. However, because its training data comes from the internet, that data may contain biases. This, in turn, could lead to results that are unintentionally biased. ChatGPT is not the only AI platform with this issue. As long as AI uses data from the internet, the problem will persist.

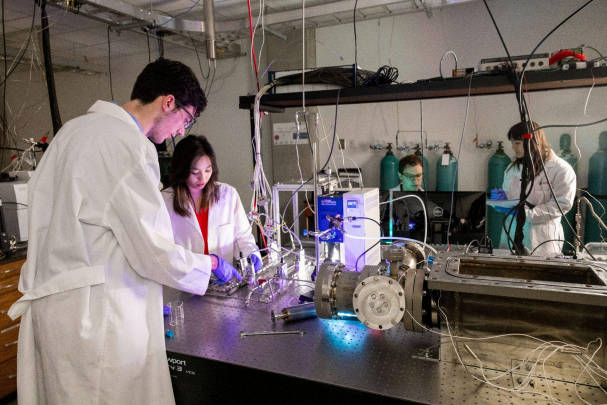

Galactica, an AI platform by Meta, was shut down three days after its release because of inaccurate and racist results. Like ChatGPT, Galactica is a large language model. One key difference between the two is the size of the training data: Galactica used 48 million text examples, while ChatGPT used 570GB of text, or 300 billion words, to learn. When more information is used for training, the possibility of bias increases.

As more and more people use AI for writing, it is important to acknowledge these issues. AI is simply a tool; it does not have any morals and cannot understand the consequences of its responses. People, especially students, also need to understand that AI is not a solution for work they don’t want to complete.

Another of the biggest concerns surrounding ChatGPT is its ability to complete students’ schoolwork. Cheating has always been a serious issue for Midtown students, and now some have turned to AI. By copying and pasting a prompt into an AI writer, such as ChatGPT, many are under the impression that they can not only get away with cheating, but will also receive factual responses.

However, even after students use paraphrasing websites such as QuillBot, which is also powered by AI, it is possible for AI writing to be traced back to its source. When a sample paragraph written by ChatGPT was paraphrased several times with QuillBot and pasted back into ChatGPT, along with a question about whether the AI wrote it, the AI recognized its own writing. Furthermore, it needs to be reiterated that not all AI responses are accurate because, according to ChatGPT’s website, there is no perfect source of truth online when training AI.

For now, users need to be cautious and critically evaluate all AI-produced work to ensure that it is accurate, unbiased and used appropriately. AI has so much potential and is already an incredible tool; however, it needs to continue to be developed carefully so that we can take steps toward a more automated future without harmful consequences.