The use of AI has surged in recent years, and the content it creates has become realistic enough to pass as genuine. This development poses a substantial threat to the future of media, as AI-generated videos have the potential to distort narratives and create a false sense of reality.

AI is routinely used by 66% of people worldwide, and almost 50% of people believe they can trust it. Users rely on AI for a wide range of tasks, from grammar recommendations to creating realistic depictions of people and places. Over time, AI’s ability to create imagery has only improved, and users can now create realistic content simply by entering a prompt with a few details. Although this capability is new, it has already had an impact on political events, where AI content used to promote a candidate misrepresented the actual situation and spread false information.

During the 2024 election, an AI-generated image circulated online depicting Taylor Swift in an Uncle Sam outfit and showing her support for President Donald Trump. Trump shared the image, which led Swift to officially endorse Kamala Harris in order to correct the false information. Because Swift has a large platform, her support or lack thereof can significantly influence the decisions of her followers. The episode shows that even a single piece of AI propaganda can sway the choices of many people.

In the short period since the election, AI has only continued to improve, and the reach of the internet has amplified its audience. This phenomenon will likely affect future political events, too. AI has significantly improved in its ability to create realistic audio and video, which allows users to create endless fake media of political candidates, potentially ruining their credibility. If voters are misled by this content, they may change their opinions based on false information, which undermines an election’s validity.

AI-generated content often spreads through social media platforms, including TikTok, Instagram and YouTube. Its realism is demonstrated by a recent social media post showing a group of bunnies jumping on a trampoline, which has accumulated more than 20 million likes and 200 million views. While the subject matter of this video may seem harmless, the video itself shows how scarily convincing AI can be. AI’s ability to fool millions of viewers with deepfake videos calls into question the future of authentic media.

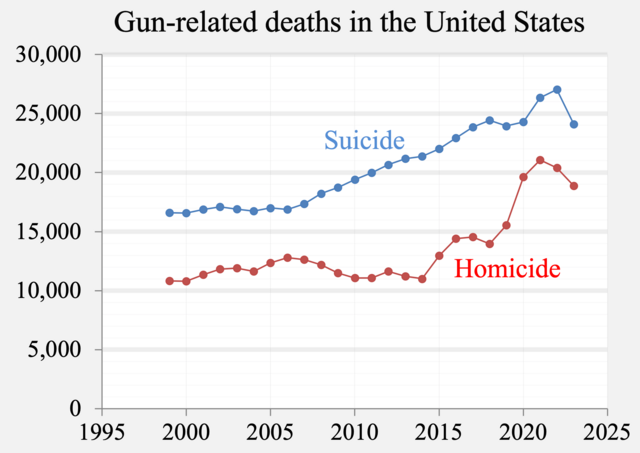

In addition to its political implications, AI’s advancements in realism could have a massive impact on the legitimacy of the justice system. In the U.S., an estimated 6% of people in state prisons have been wrongfully convicted. With a U.S. prisoner population of over 1.2 million people in 2022, that rate suggests roughly 72,000 people across the country are unfairly imprisoned. AI could make this problem worse, since it can be used to create new evidence or alter preexisting evidence. If falsified evidence is accepted as genuine, courts can no longer be sure that what they are shown is authentic. This could put countless more innocent people in prison, in addition to those already there. The current rate of wrongful conviction is already concerning, but AI-generated evidence could threaten the integrity of the justice system as a whole.
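The 72,000 figure above is simple arithmetic, and a minimal sketch makes its assumption explicit: it applies the 6% rate, which is cited for state prisons, to the full 1.2 million population figure. The function name `estimate_wrongful_convictions` is hypothetical and used only for illustration.

```python
def estimate_wrongful_convictions(prison_population: int, rate_percent: int) -> int:
    """Estimate how many people in a prison population were wrongfully convicted."""
    # Integer arithmetic keeps the result exact (no floating-point rounding).
    return prison_population * rate_percent // 100


# Figures taken from the paragraph above; applying the state-prison rate
# to the whole population is an assumption of this sketch.
PRISON_POPULATION_2022 = 1_200_000
WRONGFUL_CONVICTION_RATE_PERCENT = 6

estimate = estimate_wrongful_convictions(
    PRISON_POPULATION_2022, WRONGFUL_CONVICTION_RATE_PERCENT
)
print(f"{estimate:,}")  # prints 72,000
```

Because the population is described as "over 1.2 million," the result is best read as a rough figure and not an exact count.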

AI’s advancements also have implications for the future of education. For online work submissions and presentations, the ability to generate media that fits specific guidelines gives students an easy workaround that hinders their growth. This problem not only harms students during school, but also hampers their future lives. Skipping the work both limits what students learn and creates bad habits for future academic and professional settings.

To address this issue, policies need to be implemented to regulate AI use in published media. Limits on AI content creation could help ensure that the legitimacy of future media is not compromised. AI will be able to create more and more persuasive false media, but regulating what is created can help prevent the spread of false information.

Because online media is a source of information for millions of people around the world, exposure to even one post can drastically change someone’s opinion. It is therefore necessary that the information people are exposed to is factual. People can only make decisions that accomplish their intended goals when they are properly informed, which is paramount in critical events like elections.

In a media-centric world, it is vital that people are exposed to authentic information. Authentic information helps ensure that voters are given a proper voice, are appropriately informed about candidates and can advocate for their values and beliefs. Overall, a regulated AI industry would mean that less false media is shared on the internet for malicious purposes.