Tech companies finally protect children from online sexual abuse

On platforms run by large tech companies, vulnerable young children are exposed to predatory behavior and the worst aspects of life online.

December 17, 2021

Finally, a tech giant is making an ethical decision.

Apple Inc. has announced long-overdue plans to combat the epidemic of child sexual abuse material, which has been flowing through its platforms at an alarming rate and putting young generations at risk.

The years 2020 and 2021 have set records for the amount of abusive material found and reported, driven by the Covid-19 pandemic, which increased online activity among both children and adults. Between Jan. 14 and June 1, 2021, a total of 100,616 webpages were taken down after being reported by the Internet Watch Foundation (IWF), a registered charity that works to minimize child sexual abuse material online. An overwhelming share of the reports in 2021 involved “self-generated material,” in which children were tricked or coerced by online sexual predators into recording images of themselves.

Snapchat is one social media platform that is especially popular with minors. With almost a billion users, Snapchat lets people exchange short videos and images that disappear after being viewed. This design facilitates the spread of sexual abuse material involving minors on a global scale. Being asked to send images, or receiving unwanted material, from a near stranger on Snapchat is not uncommon. The “minimum age” for Snapchat is 13, but predators can easily attract and manipulate young users who have never been taught how to stay safe on social media.

This child sexual abuse crisis relies on technology platforms run by the world’s largest corporations. Companies have detection software in place, but it varies widely in how thoroughly it works. Yet in the face of this emergency, Apple falls short, reporting just a few hundred cases of child sexual abuse material each year. With 1.65 billion Apple devices active worldwide, that number is staggeringly low, and it is unacceptable given how well known the dangers of online activity are. At a Congressional hearing in late 2019, the Federal Trade Commission, a bipartisan commission, told Apple that it had to make progress or risk being found negligent.

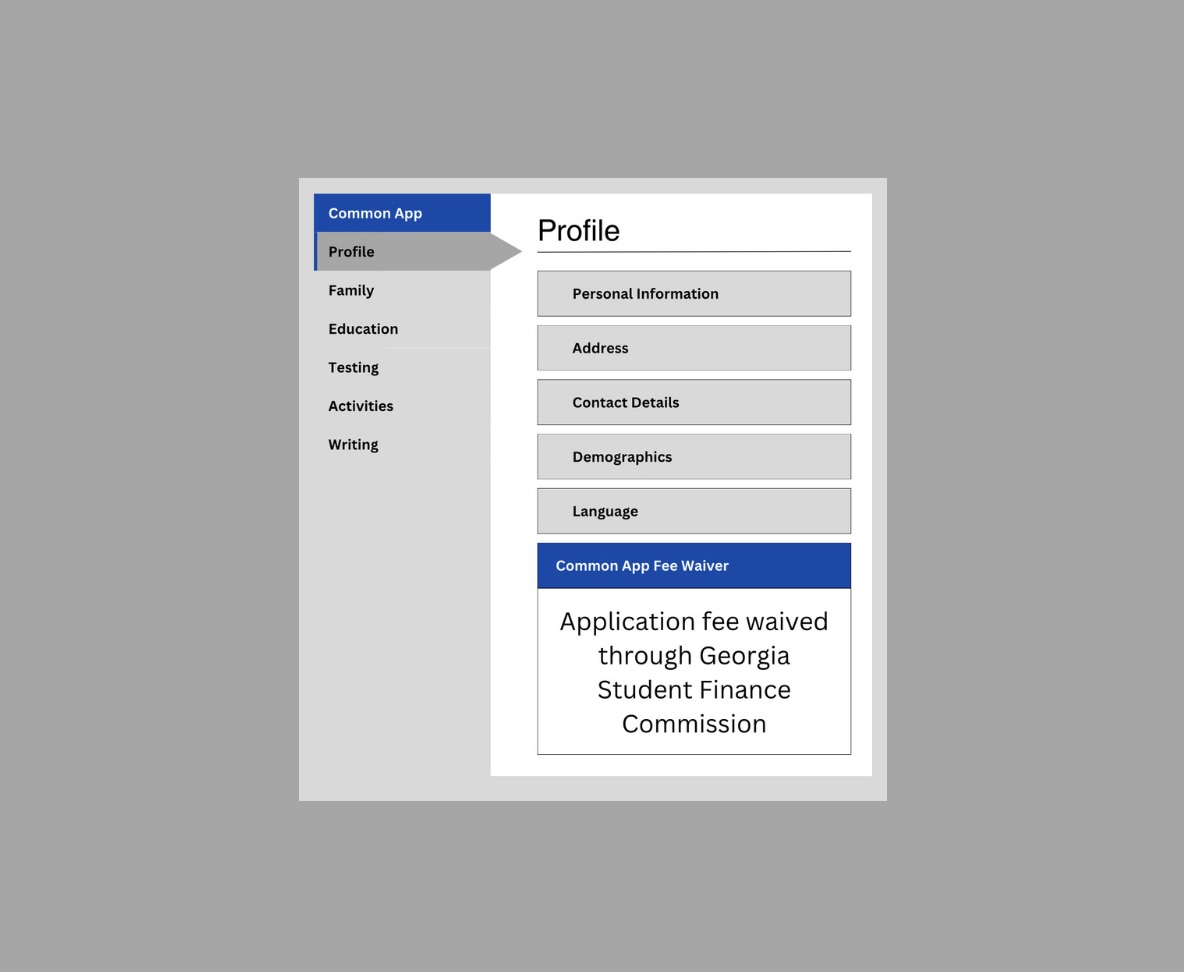

In August 2021, Apple announced plans to expand its monitoring to fight this problem. First, Apple’s virtual assistant, Siri, will offer resources on how to report child sexual abuse, along with resources for those who need help. Second, Apple will add a parent-controlled feature to its Messages app that notifies parents when their child, age 12 or younger, receives or tries to send a nude image, and that warns teenagers aged 13 to 17 before they view an explicit image. Finally, Apple will use software that scans the photos on people’s iPhones before they are uploaded to iCloud Photos, checking for matches against a database of known child sexual abuse images. These policies will significantly increase the safety of underage users.

Machine-learning software on the device will reduce each photo to a numerical fingerprint, or “hash,” and check whether it matches the fingerprint of a known abuse image; a separate on-device model in Messages detects nudity. Protecting children and protecting privacy is not a binary choice: Apple can roll this out in a way that uses abuse-detection technology while maintaining users’ privacy. The system has an unmatched capacity to protect children from further abuse, prevent images from circulating online and help apprehend offenders across Apple devices. After a backlash over privacy concerns, however, Apple has decided to postpone the launch to build support and refine the system.
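The key idea behind this kind of matching is that the phone compares fingerprints rather than having anyone look at the photo itself. Apple’s actual system, called NeuralHash, uses a neural network to compute its fingerprints and adds cryptographic safeguards; the minimal sketch below swaps in a much simpler, well-known “average hash” instead. The function names, the tiny 4x4 “images” and the `max_distance` threshold are hypothetical, chosen only to illustrate how a lightly edited copy of a known image can still match while an unrelated image does not.

```python
from typing import List, Set

# Hypothetical stand-in for a photo: a small grid of grayscale values (0-255),
# assumed to be already shrunk down from the full-size image.
Image = List[List[int]]


def average_hash(pixels: Image) -> int:
    """Simple average hash (NOT Apple's NeuralHash): one bit per pixel,
    set to 1 when that pixel is brighter than the image's mean brightness."""
    flat = [p for row in pixels for p in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for p in flat:
        bits = (bits << 1) | (1 if p > mean else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of bits that differ between two fingerprints."""
    return bin(a ^ b).count("1")


def matches_known(pixels: Image, known_hashes: Set[int], max_distance: int = 2) -> bool:
    """True if the image's fingerprint is within max_distance bits of any
    fingerprint in the (hypothetical) database of known images."""
    h = average_hash(pixels)
    return any(hamming(h, k) <= max_distance for k in known_hashes)


# Illustrative data only.
original = [[10, 200, 10, 200],
            [200, 10, 200, 10],
            [10, 200, 10, 200],
            [200, 10, 200, 10]]
known = {average_hash(original)}

edited = [[p + 5 for p in row] for row in original]  # uniformly brightened copy
unrelated = [[0, 0, 0, 0],
             [0, 0, 0, 0],
             [255, 255, 255, 255],
             [255, 255, 255, 255]]

print(matches_known(edited, known))     # True
print(matches_known(unrelated, known))  # False
```

Because the fingerprint depends only on which pixels are brighter than average, brightening the whole copy leaves it unchanged, while a genuinely different picture lands many bits away and is not flagged.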

While some see this as an intrusion into users’ devices, it will protect millions of children from online exploitation. It should not be delayed any longer: once implemented, it will be a major step toward turning the tide and creating a safer world for children.